As technology use keeps growing, strengthening cybersecurity has become imperative. AI is increasingly needed in digital immune systems, but its use raises significant ethical concerns, including privacy, fairness, accountability, explainability, and user control.

Addressing these concerns is essential if such systems are to operate fairly and responsibly. In this article, we discuss these ethical issues in detail and explain why AI in digital immune systems must be used cautiously and ethically.

Digital Immune Systems and AI

Digital Immune Systems (DIS) are advanced applications designed to improve security and dependability in the digital world. By applying AI, these systems can identify and respond to different forms of cyber threats in real time. As organizations seek to protect their digital assets through DIS, it is important to explore the ethical implications of incorporating AI into these systems.

Integrating AI into DIS raises several ethical questions, chiefly around privacy, bias, transparency, and control. Organizations that understand these issues are better placed to deploy DIS fairly and responsibly, and to earn the trust of users and other stakeholders.

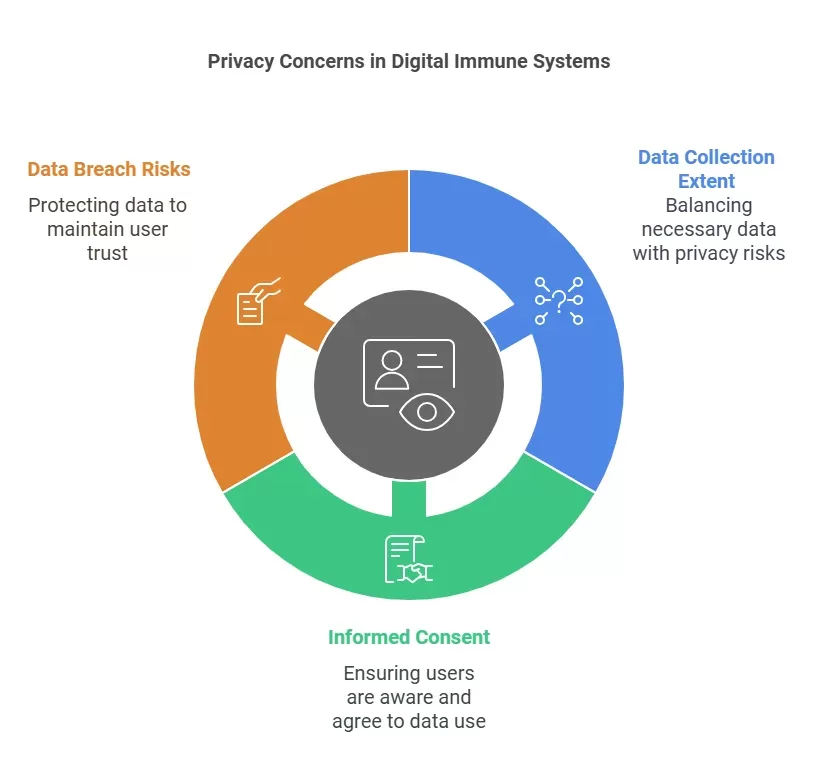

Privacy Concerns in Digital Immune Systems

Privacy is a key ethical concern for AI in Digital Immune Systems. Many of these systems need access to a vast number of data points, which may include user data. This data is used to identify threats, but the same data is dangerous if misused.

· Data Collection and Usage

Digital Immune Systems may draw information from many sources, including users, application logs, and external threat feeds. This raises the question of how much data is actually needed to identify threats.

· Informed Consent

Users need to know what data the systems collect and how organizations will use it. Companies should obtain specific consent from users before gathering any information about them.

· Risks of Data Breaches

Data breaches can cause serious harm to individuals. If someone's information is exposed, they may fall victim to identity theft or financial loss. Such events also erode the confidence users place in organizations. The data collected must therefore be protected by strong security measures.
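One way to limit both over-collection and breach impact is to keep only the fields needed for threat detection and to replace direct identifiers with pseudonyms. The following is a minimal sketch of that idea, not a prescribed implementation. The field names, the `ALLOWED_FIELDS` allow-list, and the helper names are assumptions made for illustration.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this sketch assumes are needed
# for threat detection. A real system would derive this from its own policy.
ALLOWED_FIELDS = {"timestamp", "event_type", "source_ip"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash (HMAC-SHA256).

    Events from the same user can still be correlated, but the raw ID
    is not stored alongside the event.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_event(event: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the raw user ID for a pseudonym."""
    reduced = {k: v for k, v in event.items() if k in ALLOWED_FIELDS}
    if "user_id" in event:
        reduced["user_ref"] = pseudonymize(event["user_id"], secret_key)
    return reduced


if __name__ == "__main__":
    raw_event = {
        "user_id": "alice",
        "email": "alice@example.com",
        "event_type": "login",
        "timestamp": "2024-01-01T00:00:00Z",
        "source_ip": "10.0.0.1",
    }
    # The email and raw user ID are dropped; a pseudonymous reference remains.
    print(minimize_event(raw_event, secret_key=b"demo-key-only"))
```

Pseudonymized data can still count as personal data, and fields such as IP addresses may be identifying on their own, so a sketch like this reduces exposure rather than removing the need for consent and strong security.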

Recommendations for Privacy Protection

· Use Strong Encryption:

Data must be protected with strong encryption, both in transit and at rest.

· Control Data Access:

Only authorized personnel should be able to view specific records. This reduces the risk of insider threats.

· Conduct Regular Audits:

Audits should be conducted regularly. These checks help detect vulnerabilities in data management and processing systems.
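The access-control and audit recommendations above can work together: every access decision is checked against a role and recorded so that later audits have something to review. The sketch below illustrates this under stated assumptions. The roles, permission names, and the `AccessLog` class are hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real deployment would load
# this from its own access policy.
ROLE_PERMISSIONS = {
    "analyst": {"read_alerts"},
    "privacy_officer": {"read_alerts", "read_user_records"},
}


@dataclass
class AccessLog:
    """In-memory audit trail of access decisions (illustrative only)."""
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, permission: str, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })


def check_access(user: str, role: str, permission: str, log: AccessLog) -> bool:
    """Allow the request only if the role grants the permission, and log the decision."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, permission, allowed)
    return allowed


if __name__ == "__main__":
    log = AccessLog()
    print(check_access("dana", "analyst", "read_alerts", log))        # granted
    print(check_access("dana", "analyst", "read_user_records", log))  # denied
    # Denied attempts are logged too, so an audit can surface unusual patterns.
    print([entry for entry in log.entries if not entry["allowed"]])
```

Logging denied requests as well as granted ones is a deliberate choice here, since repeated denials are often what an audit is looking for.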

Addressing Bias in AI Algorithms

A key ethical concern is bias in the AI algorithms used in Digital Immune Systems. Bias can come from many places, including the data used to train AI models and the choices developers make.

· Sources of Bias

Training Data: If the training data lacks variety or holds biases, the AI system may give unfair results.

Algorithm Design: Developers’ own biases can influence how they build algorithms, which may lead to unfair outcomes.

· Consequences of Bias

Bias in AI can lead to unequal treatment of people or groups. This issue can weaken the effectiveness of Digital Immune Systems. For example, if a Digital Immune System flags certain user behaviors as suspicious based on biased training data, it may generate false positives or negatives.

· Strategies to Mitigate Bias

- Diverse Datasets: Organizations should use a wide range of datasets when training AI models. This helps ensure fair representation.

- Continuous Monitoring: Regular checks on AI performance can help spot and fix biases as they come up.

- Transparency in Algorithm Design: Sharing clear information about how algorithms work can build trust among users and stakeholders.
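Continuous monitoring for bias can be made concrete by comparing error rates across user groups. The sketch below computes the false positive rate per group and the gap between the best and worst group. It is one possible check among many, and the record format, group labels, and function names are assumptions for illustration.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the false positive rate per group.

    `records` is an iterable of (group, flagged, actually_malicious) tuples.
    Only benign events count toward the rate, since a false positive is a
    benign event that was flagged.
    """
    benign = defaultdict(int)
    false_pos = defaultdict(int)
    for group, flagged, malicious in records:
        if not malicious:
            benign[group] += 1
            if flagged:
                false_pos[group] += 1
    return {group: false_pos[group] / benign[group] for group in benign}


def max_disparity(rates):
    """Largest gap in false positive rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical review data: (group, was flagged, was actually malicious)
    history = [
        ("region_a", True, False),
        ("region_a", False, False),
        ("region_b", False, False),
        ("region_b", False, False),
        ("region_b", True, True),
    ]
    rates = false_positive_rates(history)
    print(rates)
    print(max_disparity(rates))
```

A large disparity does not prove the model is unfair, but it is a signal that the training data or features deserve a closer look.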

Benefits of Transparency

Transparent practices build trust and promote accountability. When users understand how their data is used and how decisions are made, they are more likely to engage positively with the system.

Control and Autonomy

Integrating AI into Digital Immune Systems raises questions about user control and autonomy. As these systems gain more independence in decision-making, we must consider how this affects individual rights.

· Balancing Automation with Human Oversight

Although automation increases efficiency in detecting and responding to threats, we must keep human oversight in critical decisions. Depending entirely on automated systems may lead to problems if errors happen or if the system misunderstands a situation.

· Empowering Users

Organizations should empower users by giving them options about how they engage with Digital Immune Systems. This includes allowing users to customize settings related to data sharing and alert preferences.
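The balance between automation and human oversight discussed in this section can be sketched as a simple routing rule: low-impact, high-confidence detections are handled automatically, while anything high-impact or uncertain goes to a person. The threshold value, the action names, and the `Detection` class below are assumptions chosen for illustration, not recommended settings.

```python
from dataclasses import dataclass

# Hypothetical settings for this sketch; real values would come from
# an organization's own risk policy.
AUTO_ACTION_CONFIDENCE = 0.95
HIGH_IMPACT_ACTIONS = {"lock_account", "isolate_host"}


@dataclass
class Detection:
    action: str        # response the system proposes
    confidence: float  # model confidence between 0.0 and 1.0


def route(detection: Detection) -> str:
    """Decide whether a proposed response is applied automatically or reviewed."""
    # High-impact actions always go to a person, however confident the model is.
    if detection.action in HIGH_IMPACT_ACTIONS:
        return "human_review"
    # Low-impact actions are automated only when confidence is high.
    if detection.confidence >= AUTO_ACTION_CONFIDENCE:
        return "auto_apply"
    return "human_review"


if __name__ == "__main__":
    print(route(Detection("block_ip", 0.99)))      # auto_apply
    print(route(Detection("block_ip", 0.60)))      # human_review
    print(route(Detection("lock_account", 0.99)))  # human_review
```

Checking the impact of the action before the confidence score means that a confident but mistaken model cannot lock a user out without a person confirming the decision.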

FAQs

How can transparency and explainability be achieved in AI decision-making?

To achieve transparency and explainability in AI, we must design systems that offer clear insights into their decision-making. Using methods like explainable AI, we can make AI’s output easier for people to understand. This approach builds trust and supports ethical practices.

How can AI in digital immune systems ensure privacy and data security?

AI-driven digital immune systems play a key role in privacy and data security. They constantly monitor for threats and look for unusual activities. When they detect potential breaches, they respond quickly. These systems use advanced algorithms to find weaknesses and apply security measures, keeping sensitive information safe from unauthorized access.

What are the potential biases in AI-driven digital immune systems?

AI systems can unintentionally carry biases from their training data. This may lead to unfair outcomes. In digital immune systems, such biases might result in uneven threat detection or response actions. As a result, some vulnerabilities could be overlooked. It is essential to identify and reduce these biases to keep the system reliable.

Final Words

The use of artificial intelligence in Digital Immune Systems comes with important ethical questions, and organizations must approach them carefully. By prioritizing privacy protection, addressing bias, improving transparency, and ensuring user control, they can build trust while still enjoying the advantages of AI technology.
